Overprovisioning

When does Cluster Autoscaler change the size of a cluster?

Cluster Autoscaler increases the size of the cluster when:

- there are pods that failed to schedule on any of the current nodes due to insufficient resources.

- adding a node similar to the nodes currently present in the cluster would help.
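The two conditions above can be sketched as a toy check. This is a deliberately simplified model, not Cluster Autoscaler's actual logic: it considers CPU only, and the `Node` type, `should_scale_up` function, and `template_cpu_m` parameter are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class Node:
    cpu_capacity_m: int       # allocatable CPU in millicores
    cpu_requested_m: int = 0  # CPU already requested by scheduled pods

    @property
    def cpu_free_m(self) -> int:
        return self.cpu_capacity_m - self.cpu_requested_m


def fits_somewhere(pod_cpu_m: int, nodes: list[Node]) -> bool:
    """True if at least one existing node has enough free CPU for the pod."""
    return any(node.cpu_free_m >= pod_cpu_m for node in nodes)


def should_scale_up(pending_pods_cpu_m: list[int], nodes: list[Node], template_cpu_m: int) -> bool:
    """Toy version of the two conditions: some pod fits on no current node,
    and an empty node shaped like the existing ones would fit it."""
    for pod_cpu_m in pending_pods_cpu_m:
        unschedulable = not fits_somewhere(pod_cpu_m, nodes)
        new_node_helps = pod_cpu_m <= template_cpu_m
        if unschedulable and new_node_helps:
            return True
    return False


nodes = [Node(2000, 1900), Node(2000, 1800)]
print(should_scale_up([500], nodes, template_cpu_m=2000))   # True: fits nowhere, a new node helps
print(should_scale_up([100], nodes, template_cpu_m=2000))   # False: fits on an existing node
print(should_scale_up([4000], nodes, template_cpu_m=2000))  # False: a new node would not help
```

The third case shows why both conditions matter: a pod larger than any node the cluster can add stays pending no matter how many nodes are provisioned.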

Without overprovisioning

- kubectl apply -f my-deployment.yml

- Cannot schedule pods due to insufficient resources, deployment fails 💀

- cluster-autoscaler notices and begins to provision a new instance

- Wait for the instance to be provisioned, boot, join the cluster, and become ready 😪

- kube-scheduler will notice there is somewhere to put the pods and will schedule them

- Poor developer experience

- Failed deployments

- Cluster cannot handle any burst traffic

Overprovisioning

Allocating more computer data storage space than is strictly necessary - https://en.wikipedia.org/wiki/Overprovisioning

- kubectl apply -f my-deployment.yml

- Placeholder pods are evicted, deployment is (almost) immediately successful 🎉

- Placeholder pods cannot be scheduled due to insufficient resources

- Wait for the instance to be provisioned, boot, join the cluster, and become ready

- kube-scheduler will notice there is somewhere to put the placeholder pods and will schedule them
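The eviction step relies on pod priority: a pending pod may preempt running pods of strictly lower priority. Below is a minimal single-node sketch of that idea, assuming CPU is the only resource. The `Pod` type and `schedule` function are hypothetical and far simpler than kube-scheduler's real preemption logic.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    cpu_m: int     # CPU request in millicores
    priority: int  # higher value wins; placeholder pods use -1


def schedule(pod: Pod, running: list[Pod], capacity_m: int) -> list[Pod]:
    """Place `pod` on one node of `capacity_m` millicores, evicting
    lower-priority pods (lowest priority first) only if needed.
    Returns the evicted pods; raises if the pod cannot fit at all."""
    free = capacity_m - sum(p.cpu_m for p in running)
    evicted: list[Pod] = []
    # Preemption candidates: strictly lower priority than the incoming pod.
    victims = sorted((p for p in running if p.priority < pod.priority),
                     key=lambda p: p.priority)
    for victim in victims:
        if free >= pod.cpu_m:
            break
        evicted.append(victim)
        free += victim.cpu_m
    if free < pod.cpu_m:
        raise RuntimeError(f"{pod.name} is unschedulable; a new node is needed")
    for victim in evicted:
        running.remove(victim)
    running.append(pod)
    return evicted


# A full 2-core node: one real workload plus two 200m placeholders.
node = [Pod("app-a", 1600, 0)] + [Pod(f"placeholder-{i}", 200, -1) for i in range(2)]
evicted = schedule(Pod("app-b", 300, 0), node, capacity_m=2000)
print([p.name for p in evicted])  # ['placeholder-0', 'placeholder-1']
```

In the real cluster the evicted placeholders go back to pending, which is what prompts cluster-autoscaler to add a node in the background while the real deployment is already running.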

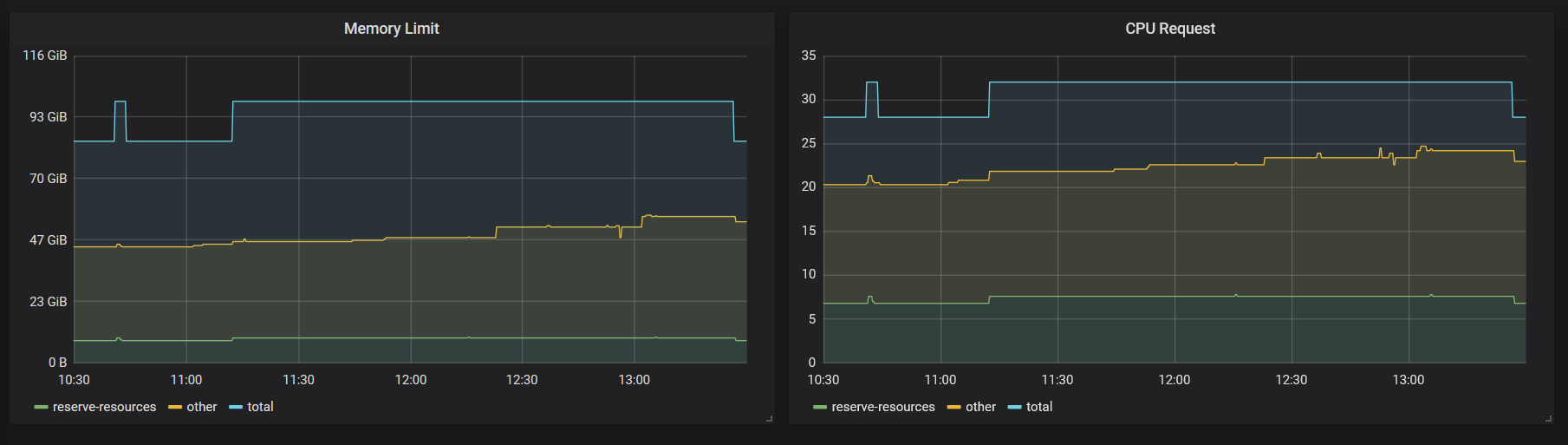

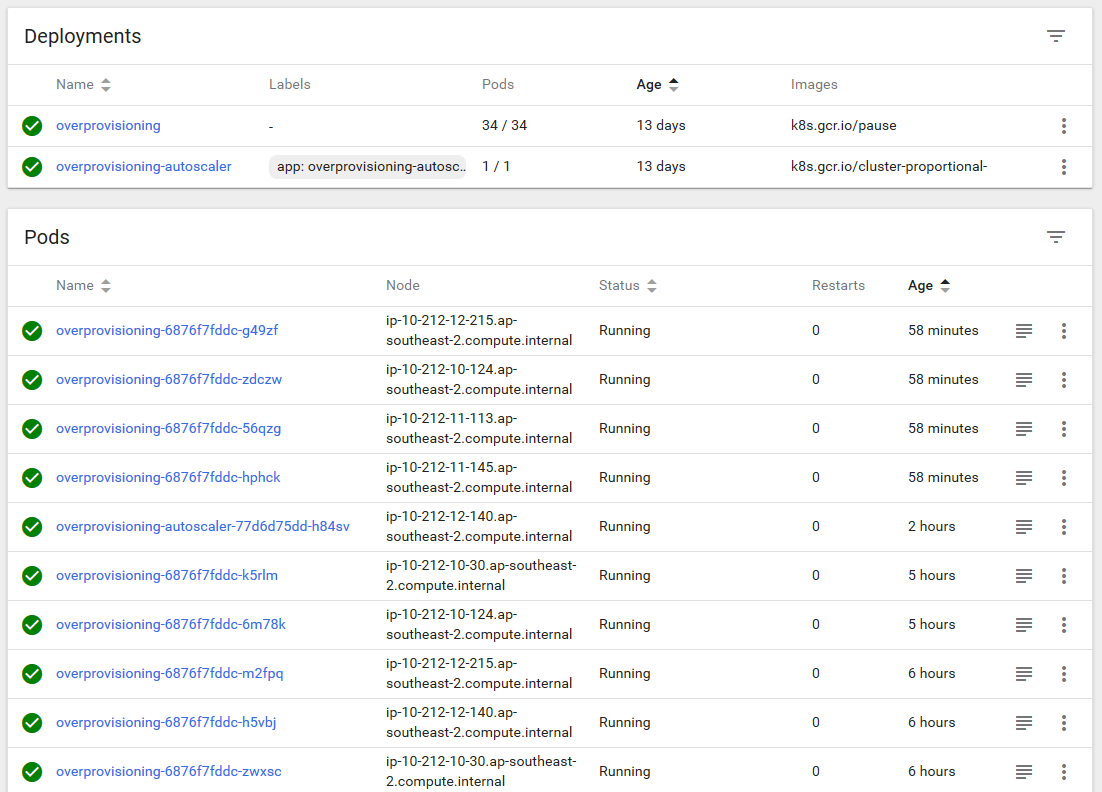

Implementation

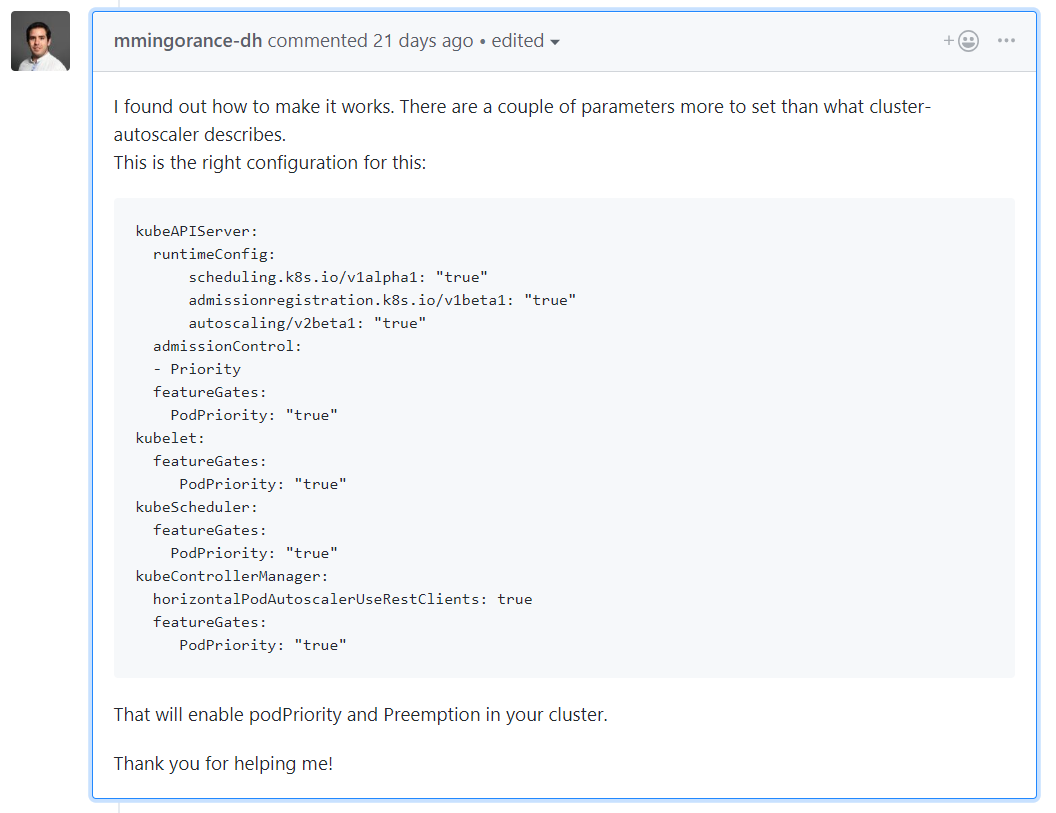

- Enable PodPriority and scheduling.k8s.io/v1alpha1

- Create a PriorityClass with a value of -1

- Deploy placeholder pods and proportional autoscaler

PriorityClass for the placeholder pods:

    apiVersion: scheduling.k8s.io/v1alpha1
    kind: PriorityClass
    metadata:
      name: overprovisioning
    value: -1
    globalDefault: false
    description: "Priority class used by overprovisioning."

Proportional autoscaler Deployment:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: overprovisioning-autoscaler
      namespace: default
      labels:
        app: overprovisioning-autoscaler
    spec:
      selector:
        matchLabels:
          app: overprovisioning-autoscaler
      replicas: 1
      template:
        metadata:
          labels:
            app: overprovisioning-autoscaler
        spec:
          containers:
            - image: k8s.gcr.io/cluster-proportional-autoscaler-amd64:1.1.2
              name: autoscaler
              command:
                - /cluster-proportional-autoscaler
                - --namespace=default
                - --configmap=overprovisioning-autoscaler
                - --default-params={"linear":{"coresPerReplica":1}} # this is the key part
                - --target=deployment/overprovisioning
                - --logtostderr=true
                - --v=2
          serviceAccountName: cluster-proportional-autoscaler-service-account

Placeholder pod Deployment:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: overprovisioning
      namespace: default
    spec:
      replicas: 1
      selector:
        matchLabels:
          run: overprovisioning
      template:
        metadata:
          labels:
            run: overprovisioning
        spec:
          priorityClassName: overprovisioning
          containers:
            - name: reserve-resources
              image: k8s.gcr.io/pause
              resources:
                requests:
                  cpu: "200m"
                limits:
                  memory: "256Mi" # not present by default
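To see why coresPerReplica is "the key part", it helps to work out how much headroom these settings reserve. The sketch below assumes the linear mode as described in the cluster-proportional-autoscaler README, reduced to the case where only coresPerReplica is set. It ignores min/max bounds, nodesPerReplica, and preventSinglePointFailure, and the function names are hypothetical.

```python
import math


def linear_replicas(cores: int, cores_per_replica: float) -> int:
    """Placeholder replica count in linear mode with only coresPerReplica set."""
    return math.ceil(cores / cores_per_replica)


def reserved_cpu_millicores(cores: int, cores_per_replica: float, request_m: int) -> int:
    """Total CPU held back by placeholder pods, in millicores."""
    return linear_replicas(cores, cores_per_replica) * request_m


cores = 16  # assumed total cluster cores, for illustration only
replicas = linear_replicas(cores, cores_per_replica=1)
reserved = reserved_cpu_millicores(cores, cores_per_replica=1, request_m=200)
print(replicas, reserved, reserved / (cores * 1000))
```

With one 200m placeholder per core, the reserved headroom stays at roughly 20% of cluster CPU as the cluster grows. Raising coresPerReplica or lowering the CPU request shrinks that share.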